Artificial Intelligence transcription

12 June 2025

Recent advances in Artificial Intelligence (AI) transcription have moved the technology beyond simple dictation to the efficient and accurate capture of conversations, which can then be summarised, interrogated, and reformatted.

This article examines some of the main legal risks associated with the use of AI transcription tools, particularly concerning privacy, confidentiality, surveillance, and intellectual property. Organisations intending to use AI transcription tools, whether for internal purposes or in interactions with customers, patients, or clients, should be aware of these ethical and legal issues.

How do AI transcription tools differ from conventional transcription tools?

AI transcription tools generally operate in one of two ways:

- Online video conferencing: audio is recorded using software and then downloaded.[1]

- Face-to-face: a microphone is used to capture speech, which is then converted into text.[2]

These tools differ from conventional transcription devices because they use machine learning, which allows them to adapt without explicit instructions. They also incorporate natural language processing, which helps them interpret dialects, accents, and colloquialisms, and automatic speech recognition, which converts audio into written text.[3] AI tools can also be used to translate audio into multiple languages.

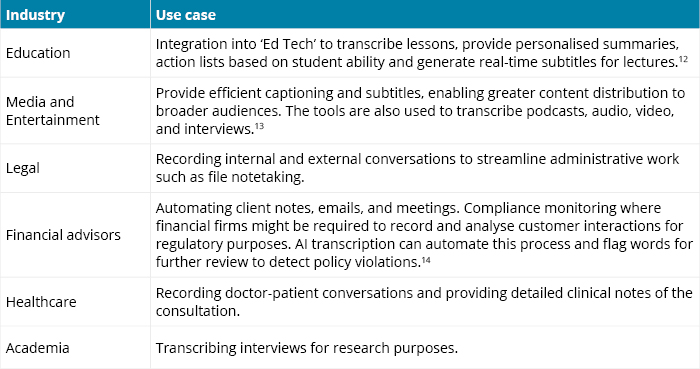

Use cases

AI transcription tools have been widely adopted across various industries.[4] They are used by organisations that traditionally rely on transcription services, such as call centres, as well as by companies that integrate transcription functionality into their existing products, such as Webex and Microsoft Teams (which uses Microsoft Copilot to transcribe meetings).[5] Below are some additional emerging use cases of AI transcription:

What legal issues arise from AI transcription use?

The use of AI transcription raises legal issues around privacy, confidentiality, surveillance, and intellectual property.

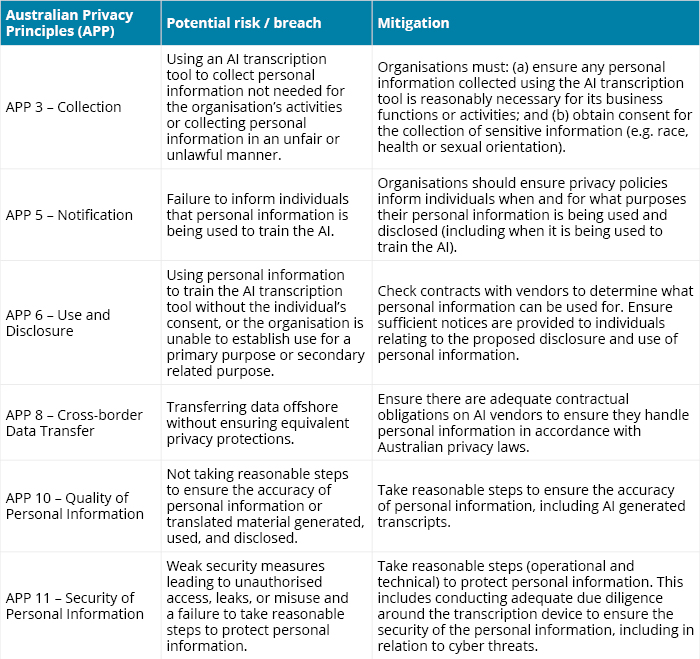

Privacy risks

The Privacy Act 1988 (Cth) outlines the requirements for the collection, use, disclosure, and storage of personal information. Below is a summary of key privacy risks associated with the use of AI transcription.

Depending on the circumstances, a breach of the Australian Privacy Principles (APPs) can be considered a ‘serious’ or ‘repeated’ interference with an individual’s privacy.[6] In addition, organisations should be mindful of the new statutory tort for serious invasions of privacy, which commenced on 10 June 2025. The tort underscores the importance of organisations collecting, using, and disclosing personal information appropriately and, where applicable, with the individual’s consent.

Risk of confidentiality breaches and the internal use of AI transcription tools

AI models often struggle to understand the context of the information they receive. As a result, confidential information is typically not identified as such and is treated the same way as less sensitive information. This increases the risk of confidentiality breaches for businesses that adopt internal AI transcription tools. Example risks include:

- AI tools using confidential information to train their models, leading to the potential inclusion of confidential information in generated outputs.

- Unauthorised access to, or distribution of, conversation transcripts by third parties who are not permitted to access that information (for example, uploading meeting minutes to the corporation’s practice management system without proper access restrictions, or sending automated emails to stakeholders with transcripts that have not been vetted for confidential information).

Organisations should consider implementing measures to mitigate these risks, such as ensuring appropriate access protocols are in place and that records are automatically deleted after the prescribed retention period. They should also check whether certain automatic functions within the AI transcription tool, such as the automated distribution of transcripts, can be disabled.

Do state and Commonwealth surveillance laws apply?

In Australia, states and territories strictly regulate the audio recording of conversations. The legislation differs from state to state; however, it is generally a criminal offence to record a private conversation, or to disclose a recording of a private conversation, without the consent of all parties (with some exceptions[7]).[8] Organisations should typically obtain informed consent before recording a conversation with an individual. Informed consent means that individuals are fully aware they are being recorded and understand the consequences of that recording, including how the information obtained will be used and for what purposes it may be disclosed.

Who owns AI-generated material, and who has an ongoing right to use it?

In Australia, there is currently no law specifically governing the ownership of intellectual property (IP) in AI-generated works. Under existing laws, copyright protection requires a human author who contributes independent intellectual effort. Similarly, the Patents Act 1990 (Cth) requires a human inventor. Where IP rights do arise in AI-generated material, ownership could potentially be attributed to various parties involved in generating the content. These include the developer or deployer of the AI transcription tool, or the person who input the data the AI used to generate the output.

Ownership of the IP in generated outputs may also depend on specific circumstances and the types of information input into the AI system. Organisations procuring an AI transcription tool should ensure that they own the IP rights in any outputs or have a perpetual licence to use such outputs.

Mitigating and managing risk – Responsible AI governance

Establishing effective AI governance frameworks and conducting AI impact assessments are important steps in managing the risks associated with AI use, including ensuring that AI systems are deployed ethically and responsibly. An AI governance framework should ideally be structured around Australia’s AI Ethics Principles. In addition, AI impact assessments can be used as an ancillary assessment tool, typically comprising three components:

- Assessment: identifying potential risks and determining whether a more comprehensive assessment is required.

- Action plan: setting clear goals and providing measures to track performance and report impacts.

- Report template: a precedent report to help users summarise risks, publish assessment results, and share learnings.

The goal of an AI impact assessment is not to eliminate risk but to highlight the risks and benefits of the AI and balance them against the organisation’s objectives for introducing it. Organisations should consider the Voluntary AI Safety Standard and the proposed mandatory guardrails for AI in high-risk settings when planning their assessments.

Future of AI transcription tools

While recent advances in AI-driven transcription tools have delivered efficiency gains for users, the associated risks to privacy, confidentiality, and IP must be managed carefully so that these benefits are realised in a legally compliant and ethical manner.

[1] Gabrielle Samuel and Doug Wassenaar, 'Joint Editorial: Informed Consent and AI Transcription of Qualitative Data' (2024) 20(1-2) Journal of Empirical Research on Human Research Ethics.

[2] A Baki Kocaballi et al, 'Envisioning an Artificial Intelligence Documentation Assistant for Future Primary Care Consultations: A Co-design Study with General Practitioners' (2020) 27(11) Journal of the American Medical Informatics Association 1695–1704.

[3] Verbit, 'AI Transcription: A Comprehensive Review' (Web Page, 2025)

[4] NSW Supreme Court, Practice Note SC Gen 23: Use of Generative Artificial Intelligence (Gen AI).

[5] Microsoft, 'Copilot in Microsoft Teams Meetings and Events' (Web Page, 2025)

[6] StoryShell, 'The Game-Changing Impact of AI-Powered Translation, Transcription, and Dubbing in the Media and Entertainment Industry: A Case for Czech and Bulgarian Markets' (Web Page, 2025)

[7] Way With Words, 'Exploring Use Cases for Speech Data in AI' (Web Page, 2025)

[8] Milvus, 'What are the use cases of speech recognition in financial services' (Web Page)